Last updated 07/03/2025

Artificial intelligence is now woven into much of our lives, from hiring and health care to finance and security. It has become so pervasive that its impact on society needs to be examined, and one of the most important aspects is the fairness of AI systems: if fairness is ignored, AI can produce biased decisions and entrench unfair societal norms. Fairness is essential to ensure that AI benefits everyone equally and follows design principles that promote trust and inclusion.

In this blog, we will explore why fairness is essential in AI products. We will also look at the principles and other basics behind fair AI products.

AI fairness means that AI makes fair and equal decisions for everyone. It should not treat some people better than others based on gender, race, age, or background. For example, if an AI hiring tool favors men over women or a loan approval system denies loans to certain ethnic groups, that’s AI being unfair.

Fixing AI bias means making sure AI:

Curious about AI and ethics? Check out What are some Ethical Considerations when using Generative AI to learn more.

AI is everywhere, from job hiring to healthcare and from banking to security. But here’s the thing: AI is only as fair as the data it learns from. If AI systems pick up human biases, they can make unfair decisions that affect real people.

That’s why fairness in AI is such a big deal. AI must treat everyone fairly, without favoring one group over another. But ensuring fairness is not easy. Problems like AI bias and bias in machine learning can sneak into AI models.

So, how do we fix this? Let’s look at how fair AI models work, the risks of unfair AI, and how we can build trustworthy AI systems.

When AI is unfair, it can cause serious harm. Here’s why bias in machine learning is a big problem:

Amazon once built an AI hiring tool that, according to news reports, downgraded resumes from women because it was trained on past hiring data that favored men. The company ultimately scrapped it.

Some facial recognition systems struggle to recognize Black and Asian faces, making more mistakes for these groups than for White individuals. This led to wrongful arrests in some cases.

AI used in loan approvals sometimes rejects applicants from minority groups, even when they have good credit. This creates financial inequality.

When AI is unfair, it can lead to bad decisions, legal trouble, and public backlash. Companies must ensure fairness or risk losing trust.

For more on AI security, check out Generative AI in Cybersecurity.

Fairness in Generative AI means creating and using systems without bias, and treating all groups equally. This involves reducing bias in algorithms, as these systems can unintentionally reflect societal biases. It also means fixing any lack of diversity in training data to avoid biased outcomes.

Fairness through unawareness means leaving protected attributes, such as gender or race, out of the features a model uses, so that it cannot base decisions on them directly. Other approaches instead aim for equal chances by making predictions equally accurate across different groups. Both are often suggested in ethical guidelines for fair decision-making.

These biases must be eliminated through conscious effort at every stage of development, so that systems treat users fairly instead of perpetuating discrimination.
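A minimal sketch of fairness through unawareness, assuming applicant records are plain dictionaries and that `gender` and `ethnicity` are the protected attributes. The field names and the helper `drop_protected` are hypothetical and chosen only for illustration:

```python
# Hypothetical set of protected attributes; adjust to the data at hand.
PROTECTED = {"gender", "ethnicity"}


def drop_protected(record, protected=PROTECTED):
    """Return a copy of the record without the protected attributes."""
    return {key: value for key, value in record.items() if key not in protected}


# Toy applicant record (invented values, not real data).
applicant = {"gender": "F", "ethnicity": "B", "income": 52000, "postcode": "411001"}

# Only these features would be passed on to the model.
features = drop_protected(applicant)
print(features)  # {'income': 52000, 'postcode': '411001'}
```

Note that removing the columns does not remove proxies: a field such as `postcode` can still correlate with a protected attribute, which is one reason unawareness on its own is usually treated as a starting point and not a complete fix.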

Fairness in artificial intelligence is necessary for user trust and acceptance, as well as regulatory compliance. If users think that an AI product treats them fairly, they are more likely to accept it.

Regulations such as the GDPR in the EU place obligations on how personal data is processed, including in automated decision-making, which pushes AI products to be developed and used responsibly. Fairness touches every part of product design, from training data selection to post-processing of model outputs, with the aim of eliminating discrimination and meeting recognized standards of fairness.

In one example, Microsoft said it had reduced racial and gender bias across demographic groups in its facial recognition systems, an effort that also sets a standard for how AI should be developed fairly.

When fairness is not taken into account in AI products, the result can be harm, including discrimination and legal action. Examples include facial recognition tools that misidentify people from specific ethnic groups, which exposes gaps in how inclusive AI is. These problems can result in serious legal challenges and make people lose confidence in AI solutions.

Societal Negative Impact: Unfair AI can reinforce harmful stereotypes and inequality in society. For instance, biased hiring algorithms tend to discriminate against specific groups, reducing their access to opportunities and further reinforcing disparities. This harms not just the individuals affected but society as a whole.

Legal and Financial Implications: As regulations on AI and data use become stricter, companies whose systems lack fairness face growing exposure. Legal challenges can bring costly fines and litigation that drain resources and distract from innovation. The financial repercussions can also create an atmosphere of fear and uncertainty for businesses that do not take proactive action toward fairness.

Reputational Damage: Unfair AI practices can seriously damage a brand's reputation. Public outcry, especially on social media, can generate significant negative publicity and erode consumer trust. Restoring a damaged reputation takes substantial time and money, and customer loyalty and brand value suffer in the meantime.

Loss of Competitive Advantage: With consumers and businesses becoming increasingly aware of ethical issues, neglecting fairness can leave a product behind its competitors. Organizations that pay more attention to fairness in AI systems will attract more socially responsible customers, while those that do not may lose business.

Inhibiting Innovation: A lack of diversity in AI development can also hold back innovation, because it excludes the perspectives that often lead to new ideas. When some people's opinions and outlooks are left out, unique solutions for the product are likely to be missed.

The risks of not accounting for fairness in AI products are therefore multifaceted, affecting individuals, organizations, and society at large. Fairness is both a compliance matter and a strategic imperative that builds trust, protects the brand reputation, and sparks innovation.

Developers can adopt various techniques to make AI fair. Fairness-aware machine learning approaches modify model training algorithms so that biases are identified and corrected during development.

Other best practices include conducting bias audits that check AI systems at regular intervals for differences in how groups are treated. They also include training on diverse datasets and, at deployment, applying post-processing adjustments to remove residual bias from the outputs. Together these help AI developers spot early signs of bias, correct them, and keep the process fair over time.
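One commonly cited technique in this family is reweighing, which gives each training example a weight so that group membership and outcome look statistically independent to the learner. It is shown here only as an illustration and is not necessarily the technique the article has in mind. The function name `reweighing_weights` and the toy data are assumptions:

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Toy hiring history (invented): group "m" was hired more often than group "f".
groups = ["m", "m", "m", "f", "f", "f"]
labels = [1, 1, 0, 1, 0, 0]  # 1 = hired, 0 = rejected

weights = reweighing_weights(groups, labels)
print(weights)  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
```

The under-represented combinations (a rejected "m", a hired "f") receive weights above 1, so a learner that accepts per-example weights would pay more attention to them during training.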
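A bias audit of the kind described here could be sketched as a recurring check that compares accuracy across groups and flags large gaps. The function name `audit_accuracy_by_group`, the `tolerance` of 0.1, and the toy data are all assumptions made for this example:

```python
from collections import defaultdict


def audit_accuracy_by_group(y_true, y_pred, groups, tolerance=0.1):
    """Compute accuracy per group and flag the model if the gap is too large."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == prediction)
    accuracy = {group: correct[group] / total[group] for group in total}
    gap = max(accuracy.values()) - min(accuracy.values())
    return {"accuracy": accuracy, "gap": gap, "flagged": gap > tolerance}


# Toy audit batch (invented): the model is right for group "a" more often.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

report = audit_accuracy_by_group(y_true, y_pred, groups)
print(report)  # {'accuracy': {'a': 1.0, 'b': 0.5}, 'gap': 0.5, 'flagged': True}
```

Running the same check on fresh data at each audit interval gives a simple record of whether the gap between groups is shrinking or growing over time.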

Transparency in AI is important since it allows users and other stakeholders to understand and verify AI's decision-making processes. In fact, explainable AI models make it possible for users to see how decisions are made, opening the way to feedback and further adjustments.

Being transparent about AI development means clearly communicating how an AI system works, which tasks it cannot perform, and what criteria actually drive its decisions. When users understand the system, trust and accountability follow.
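To make the idea of showing which criteria drive a decision concrete, here is a minimal sketch of a points-based scoring model that reports each feature's contribution alongside the result. The feature names, point values, `BASE_POINTS`, and `APPROVAL_THRESHOLD` are all invented for illustration and do not come from any real system:

```python
# Hypothetical scorecard: points added per unit of each feature.
POINTS = {"income_k": 2, "years_employed": 5, "missed_payments": -20}
BASE_POINTS = 10
APPROVAL_THRESHOLD = 100


def explain(applicant):
    """Return the total score and the contribution of each feature to it."""
    contributions = {name: POINTS[name] * applicant[name] for name in POINTS}
    score = BASE_POINTS + sum(contributions.values())
    return score, contributions


score, contributions = explain(
    {"income_k": 60, "years_employed": 4, "missed_payments": 1}
)
decision = "approve" if score >= APPROVAL_THRESHOLD else "decline"

print(decision, score)  # approve 130
for name, value in contributions.items():
    print(f"{name}: {value:+d}")
```

Because every contribution is visible, a user or auditor can see which criteria pushed the decision up or down and challenge any that look unfair. More complex models need dedicated explanation methods, which this sketch does not cover.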

Diversity plays an important role in making systems fair, because a diverse set of perspectives helps anticipate and correct biases. Diverse design teams bring unique insights into the user experience across demographics, leading to more inclusive outcomes from AI.

An example is Google, which has worked on debiasing its AI systems by increasing team diversity and improving the fairness of applications such as Google Photos. If companies work with different voices, biases in their AI products are more likely to be caught and mitigated, contributing to both the inclusiveness and effectiveness of the AI product.

According to a report by Statista, as of 2024 nearly 35% of the companies in this sector had put at least the recommended fairness measures into practice. Of these, 2% claim to have fully executed all measures.

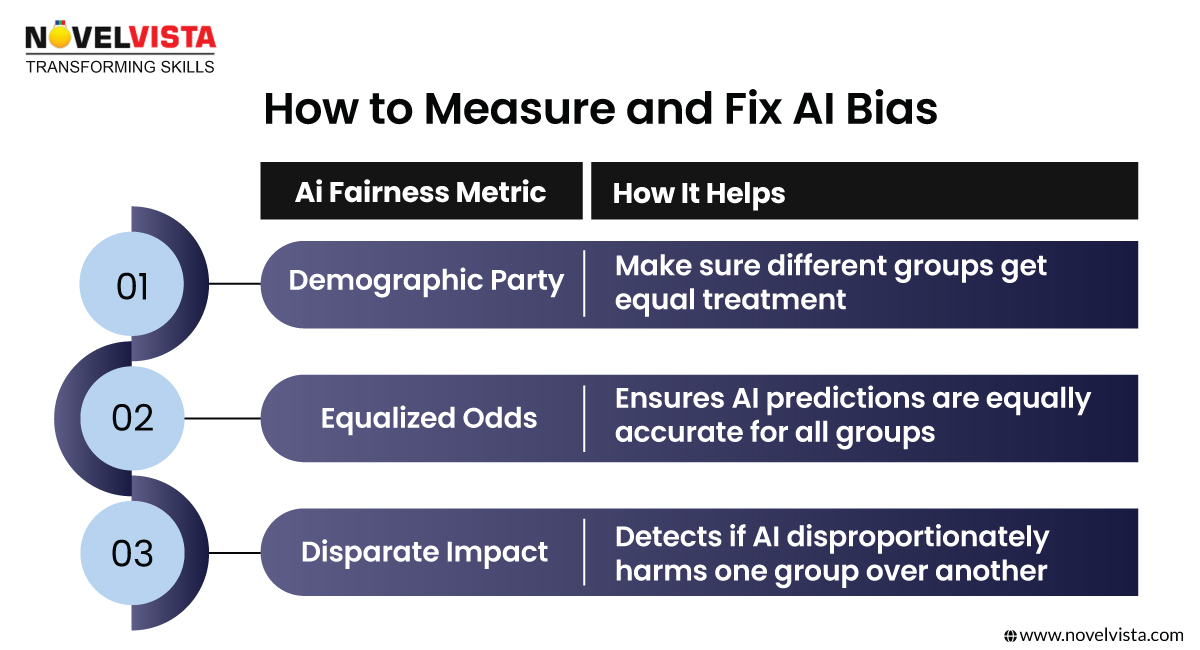

Developers use AI fairness metrics to check and fix bias in AI models. Here are a few key ones:

These fairness metrics help keep AI transparent and accountable.
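As an illustration, two widely used group fairness metrics are the demographic parity difference (the gap in how often each group receives a positive prediction) and the equal opportunity difference (the gap in true positive rates). Choosing these two is an assumption about which metrics are meant. The function names and the toy data are also assumptions, and the sketch presumes every group contains at least one truly positive case:

```python
def by_group(values, groups, target):
    """Keep only the values that belong to the target group."""
    return [v for v, g in zip(values, groups) if g == target]


def selection_rate(y_pred):
    """Share of cases that received a positive prediction."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true, y_pred):
    """Share of truly positive cases that were predicted positive."""
    hits = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(hits) / len(hits)


def demographic_parity_difference(y_pred, groups, a, b):
    return selection_rate(by_group(y_pred, groups, a)) - selection_rate(
        by_group(y_pred, groups, b)
    )


def equal_opportunity_difference(y_true, y_pred, groups, a, b):
    tpr_a = true_positive_rate(by_group(y_true, groups, a), by_group(y_pred, groups, a))
    tpr_b = true_positive_rate(by_group(y_true, groups, b), by_group(y_pred, groups, b))
    return tpr_a - tpr_b


# Toy predictions (invented) for two groups, "a" and "b".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

dpd = demographic_parity_difference(y_pred, groups, "a", "b")
eod = equal_opportunity_difference(y_true, y_pred, groups, "a", "b")
print(dpd, eod)  # 0.5 0.5
```

A value of 0 on either metric means the two groups are treated alike by that measure; the further the value is from 0, the larger the disparity.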

Developers use special tools to ensure AI is fair and unbiased. Some of the most popular ones include:

By using these tools, AI models can be smarter and fairer.

For more insights, check out What is the difference between generative AI and predictive AI.

Governments are now pushing for AI fairness. In the UK, for example, the Information Commissioner’s Office (ICO) has issued guidance aimed at preventing discrimination in AI.

New laws require AI to be:

If companies don’t follow these rules, they could face legal action or huge fines. Want to know how AI is shaping the future? Read What is the main goal of generative AI.

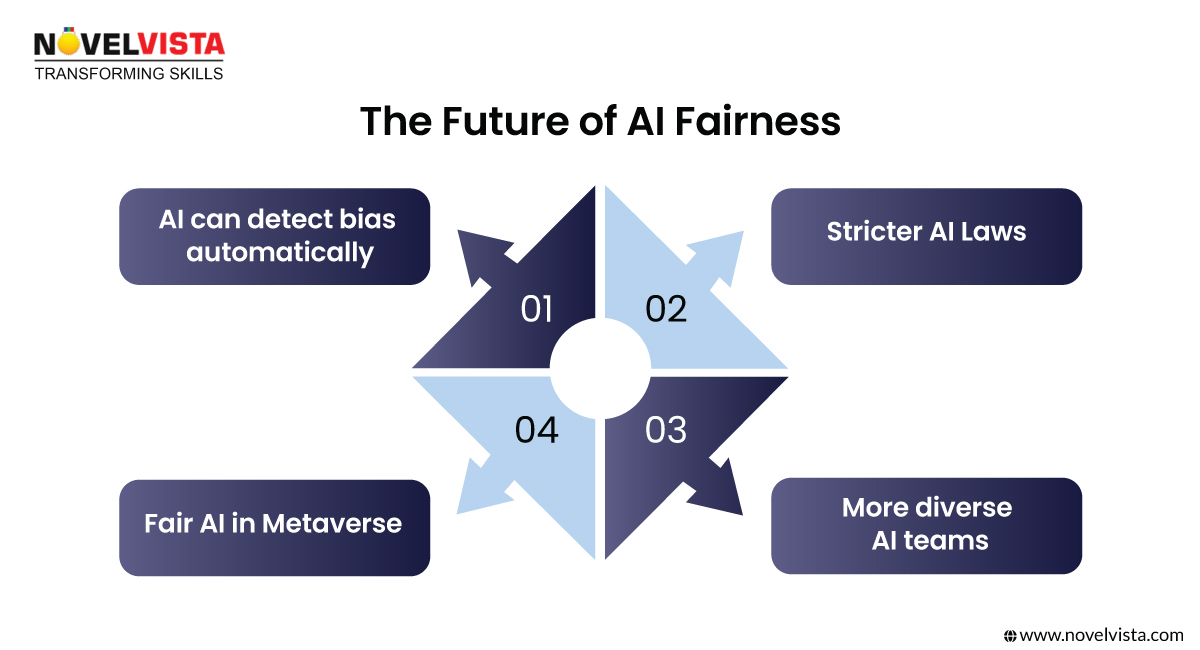

As AI becomes more powerful, ensuring AI fairness is more important than ever. Some future trends to watch include:

New AI models will be able to find and fix biases in real time.

Governments will introduce tougher laws to prevent AI discrimination.

Companies will hire more diverse teams to avoid one-sided AI training.

As the metaverse grows, AI fairness will become even more critical.

Finally, fairness in AI is essential for building AI systems that people can trust and rely on. AI products built with a focus on fairness are more inclusive and more likely to comply with regulatory standards, which supports long-term success.

By putting fairness first, companies can develop reliable, legally sound AI solutions that serve a wide range of users. Advancing AI fairly will help the world keep benefiting from its progress while accounting for its societal impacts.

AI should help people, not harm them. Ensuring fairness in AI is the key to building trust and preventing discrimination. Companies, developers, and regulators must work together to:

AI fairness isn’t just a nice-to-have—it’s a must-have for a better, more just world.

Want to be a master in AI fairness? Start your Journey by learning the Generative AI Certification Course at NovelVista!

Vikas is an Accredited SIAM, ITIL 4 Master, PRINCE2 Agile, DevOps, and ITAM Trainer with more than 20 years of industry experience, currently working with NovelVista as Principal Consultant.
