Have you ever wondered what ethical considerations come with using generative AI? Generative AI is a technology that can create new content, such as text, images, audio, and even video, by learning from existing data. It has the power to transform industries like healthcare, entertainment, marketing, and education by boosting creativity, automating tasks, and personalizing experiences for users.

But as generative AI continues to grow, it’s important to think about the ethical challenges that come with it. Ignoring these issues could lead to serious problems like misinformation, bias, and privacy breaches.

In this blog, you’ll learn about the key ethical concerns tied to generative AI, including harmful content, copyright and intellectual property, fairness and bias, privacy, transparency, job loss, human control, and accountability. Tackling these issues is essential for using generative AI responsibly and ethically. Let’s get started!

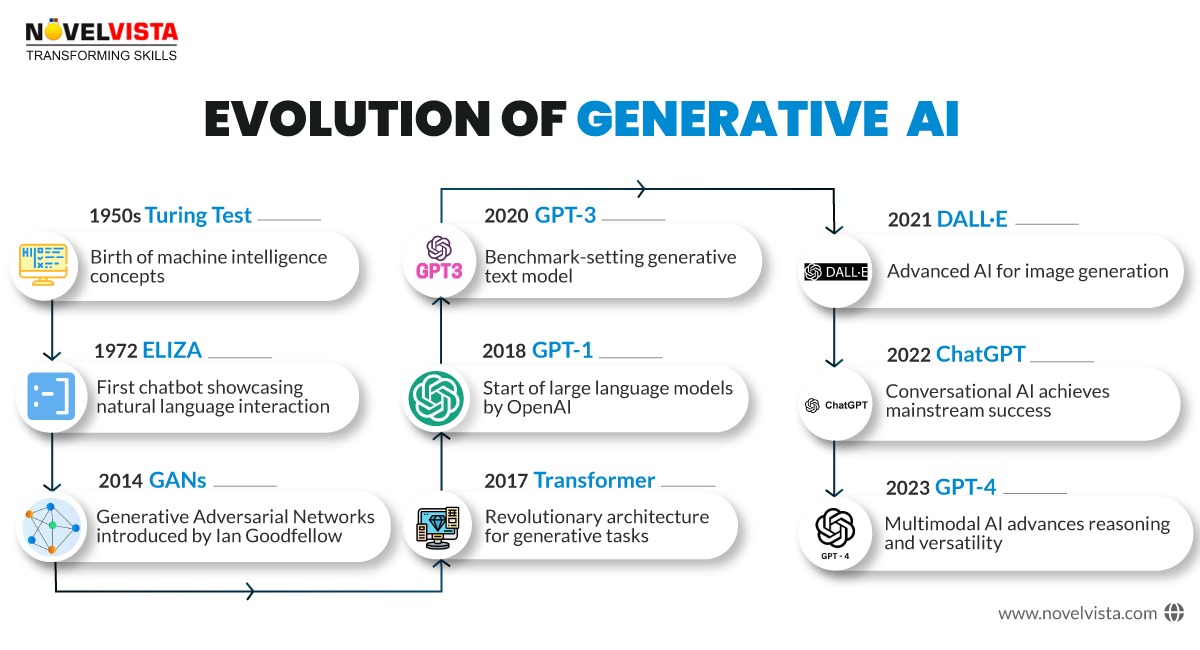

Alan Turing introduced the Turing Test, a criterion for judging whether a machine can exhibit human-like intelligence. Much of modern artificial intelligence research traces its roots to this idea.

ELIZA, programmed by Joseph Weizenbaum, simulated human-like conversation by matching keywords and returning scripted responses. It was an early, promising sign of what natural language processing and human-computer interaction (HCI) could become.

Ian Goodfellow and his colleagues introduced the generative adversarial network (GAN), in which two neural networks, a generator and a discriminator, compete so that the generator learns to produce realistic output. GANs revolutionized AI by making it possible to produce highly realistic images, videos, and data.

In their seminal paper "Attention Is All You Need", Vaswani and his co-authors proposed the transformer model, which changed the face of NLP and AI. Its self-attention mechanism processes all positions in a sequence in parallel, making it far more efficient to train than earlier recurrent models for tasks such as translation, summarization, and text generation.

OpenAI published GPT-1, a generative pre-trained transformer trained with unsupervised learning on a large text corpus. This opened the door to language models that improve as they scale.

GPT-3, with 175 billion parameters, proved remarkably versatile: it could write prose, generate code, and answer questions posed in natural language. It extended the use of AI into creative work and knowledge applications.

OpenAI's DALL·E showcased text-to-image generation, revealing AI's ability to produce images from written descriptions.

ChatGPT, initially built on the GPT-3.5 series of models, became an accessible conversational interface used in all walks of life, from customer service to education and entertainment, bringing AI to a mass audience.

OpenAI's GPT-4 is multimodal, accepting both text and image inputs, and showed stronger reasoning than its predecessors. It marked a step toward more comprehensive and human-like AI systems.

Generative AI has revolutionized creativity and productivity, but it also brings significant concerns and risks that businesses and professionals must address. Here are the main concerns and risks you should know.

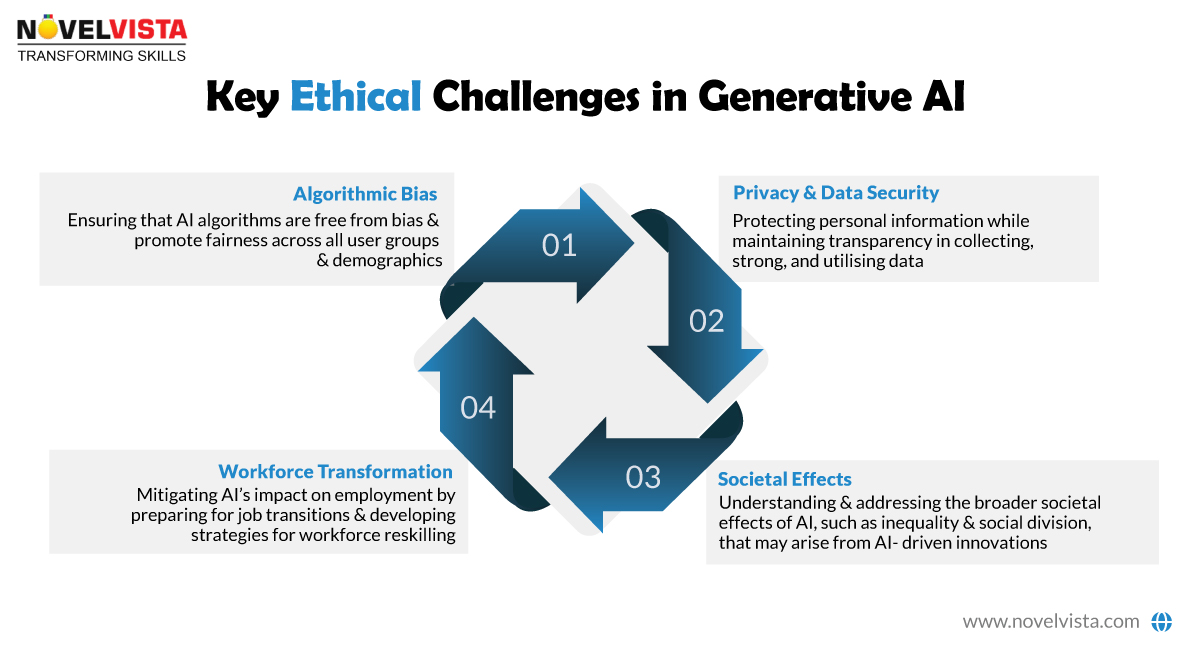

Generative AI models are trained on large datasets, but these datasets can contain hidden biases that affect the AI's results. These biases can reinforce stereotypes, harm disadvantaged groups, and skew decisions in unfair ways.

Generative AI tools can create realistic text, images, and deepfakes, which makes them easy to use for spreading misinformation. Such content can be exploited for fraud, propaganda, and impersonation, creating cybersecurity threats and eroding trust.

Because generative AI draws on existing works to produce new content, the boundaries of copyright are often misunderstood. A creator may not even realize that AI-generated content infringes someone else's intellectual property, and the result can be legal or financial damages.

Hackers can use Gen AI to develop sophisticated phishing schemes or to break authentication systems. This misuse of artificial intelligence puts personal and organizational data at risk.

Over-reliance on Gen AI can stifle human creativity and lead to job displacement, particularly in roles focused on repetitive or creative tasks. It also raises concerns about workforce reskilling and economic inequality.

So, what ethical considerations apply when using generative AI, including how to prevent misuse, protect intellectual property, and ensure fairness in its applications?

Generative AI works by spotting patterns in the data it's trained on, but if that data includes biases around things like gender, race, or culture, the AI can unintentionally pick up those biases. As a result, the AI’s output can sometimes reflect unfair ideas or lead to unjust outcomes.

These biased results can have real-world consequences, affecting things like job hiring, credit approvals, and the types of content you see online. For example, if an AI tool for screening job applications has learned from biased hiring practices that favor men, it might unfairly prioritize male candidates, leading to gender discrimination in hiring.

To reduce this risk, it’s important to train AI on diverse and balanced datasets. This helps ensure the AI learns from a wide range of experiences and perspectives, making it less likely to produce biased results. Along with diverse data, it's also important to have transparency in how AI systems are built and to regularly check for any biases in the system through audits.
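One common audit is to compare how often a model produces a positive outcome for each group. The sketch below is a minimal illustration, assuming screening decisions are available as (group, selected) pairs; the function names, the sample data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb in US employment guidance) are illustrative assumptions, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive outcomes per group.

    `decisions` is a list of (group, selected) pairs, e.g. ("women", True).
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so this metric shows no disparity
    return min(rates.values()) / highest


def audit(decisions, threshold=0.8):
    """Flag the model when the ratio falls below `threshold` (assumed 0.8)."""
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    return rates, ratio, ratio < threshold


# Hypothetical results from an AI resume filter (illustrative data only).
sample = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)
rates, ratio, flagged = audit(sample)
print(rates)            # {'men': 0.6, 'women': 0.3}
print(round(ratio, 2))  # 0.5
print(flagged)          # True
```

A flag like this does not prove discrimination on its own; it tells reviewers where to look more closely at the data and the model.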

Developers should follow ethical guidelines that emphasize fairness and nondiscrimination, making diversity and inclusion a priority in both the data and the design of AI models. By doing this, we can help create AI systems that are fairer and better reflect the diversity of the people they serve.

Using personal data to train AI models raises serious privacy concerns, especially when consent is not properly obtained. Individuals' rights may be violated if sensitive information is collected and used without their agreement.

AI technologies that can mimic personal writing styles or voices may violate an individual's privacy by copying their unique traits without their knowledge or consent.

To address these issues, effective data protection measures must be implemented, including strict privacy rules, data encryption, and transparency about how data is collected and used. By taking these steps, businesses can protect personal information and build trust in AI technologies.

When AI systems cause harm, like creating offensive or harmful content, figuring out who is responsible can be tricky. There are no clear rules on who should be held accountable for what AI creates or does, which leaves victims without recourse.

To fix this, we need to set up ethical oversight and strong legal guidelines that clearly define who is responsible—whether it’s the developers, users, or companies behind the AI.

By creating solid rules for how AI is built and used, we can ensure that there are systems in place to handle any problems and protect people from being harmed by AI technology.

AI systems can be so complex that it’s often hard to understand how they make decisions. This is called the "black box problem." It’s especially concerning in fields like healthcare or law, where AI's decisions can have serious consequences.

To solve this, we need explainable AI (XAI): systems that can clearly show how and why they reached a particular decision. Helping people understand the limits of AI also manages expectations and encourages responsible use.
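For simple models, an explanation can be exact. The sketch below assumes a hypothetical linear scoring model for job applicants (the feature names, weights, and `score_with_explanation` helper are invented for illustration): each feature's contribution is its weight multiplied by its value, so a reviewer can see exactly what pushed the score up or down. Generative models are far more complex, and attribution methods such as SHAP aim to provide a comparable breakdown for them.

```python
# Hypothetical weights for a linear applicant-scoring model (illustration only).
WEIGHTS = {
    "years_experience": 0.5,
    "certifications": 1.0,
    "employment_gap_years": -0.75,
}
BIAS = 1.0


def score_with_explanation(applicant):
    """Return the score plus each feature's contribution (weight * value)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    # Most influential features (by absolute effect) first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


applicant = {"years_experience": 4, "certifications": 2, "employment_gap_years": 2}
score, ranked = score_with_explanation(applicant)
print(score)  # 3.5
for name, effect in ranked:
    print(f"{name}: {effect:+.2f}")
```

An explanation like this also makes questionable factors visible: a reviewer can ask whether penalizing employment gaps is fair before the model is deployed.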

By making AI more transparent and easier to explain, we can build trust and ensure it's used ethically and responsibly.

The rise of generative AI has raised concerns that it could replace human creators in domains like art, music, and literature. These fears are heightened by the prospect of job losses in creative industries due to automation. Companies have an ethical responsibility to balance innovation with its social effects, ensuring that advancements do not come at the expense of human jobs.

To solve these problems, hybrid models must be promoted in which AI augments, rather than replaces, human ingenuity. By encouraging human-AI collaboration, we can improve creative processes while retaining the distinct contributions of human artists and inventors.

To use generative AI ethically, it's important to understand good practices. Gen AI is a powerful tool with applications in fields ranging from content creation to healthcare and education. However, its misuse can lead to ethical concerns such as misinformation, privacy breaches, and bias. Here are the practices to follow to use Gen AI ethically.

Always disclose when text, images, or videos have been generated with artificial intelligence. Being transparent with your audience builds trust and ensures that users know where the content came from.

Do not use Gen AI to create or spread misinformation, hate speech, or content that reinforces harmful stereotypes. Review outputs to make sure they meet ethical standards and promote positive engagement.

Avoid including sensitive or personal data when preparing training data or prompts for an AI model. Comply with privacy laws, such as India's Digital Personal Data Protection Act, 2023 (DPDP Act), to safeguard users' information.
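One simple safeguard is to mask obvious personal identifiers before text is sent as a prompt or added to training data. This is a minimal sketch using regular expressions, assuming only email addresses and phone numbers need masking; the patterns and the `redact` helper are hypothetical, and regex matching alone will miss many kinds of personal data (names, addresses, ID numbers), so it is no substitute for a proper compliance review under laws like the DPDP Act.

```python
import re

# Illustrative patterns only: real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{8,}\d"),
}


def redact(text: str) -> str:
    """Replace matched personal data with placeholder tags such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Summarize the complaint from priya.sharma@example.com, phone +91 98765 43210."
print(redact(prompt))
# Summarize the complaint from [EMAIL], phone [PHONE].
```

Redacting at the point where data leaves your system keeps the model useful for the task while limiting how much personal information it ever sees.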

Gen AI models may inadvertently reflect biases present in their training data, so evaluate outputs regularly for fairness and inclusivity. Use diverse datasets to reduce bias and provide balanced results.

Generative AI should assist humans in forming opinions or judgments, not replace them. Have a human review AI-generated content to ensure it meets ethical and quality standards, and maintain accountability for the results produced.

Concentrate on using Gen AI to resolve real-world issues and generate value. Applications such as accessibility tools, personalized learning and medical research showcase ethical and beneficial uses of AI. With the help of these principles, individuals and businesses can harness the potential of Generative AI while reducing risks and ensuring ethical use.

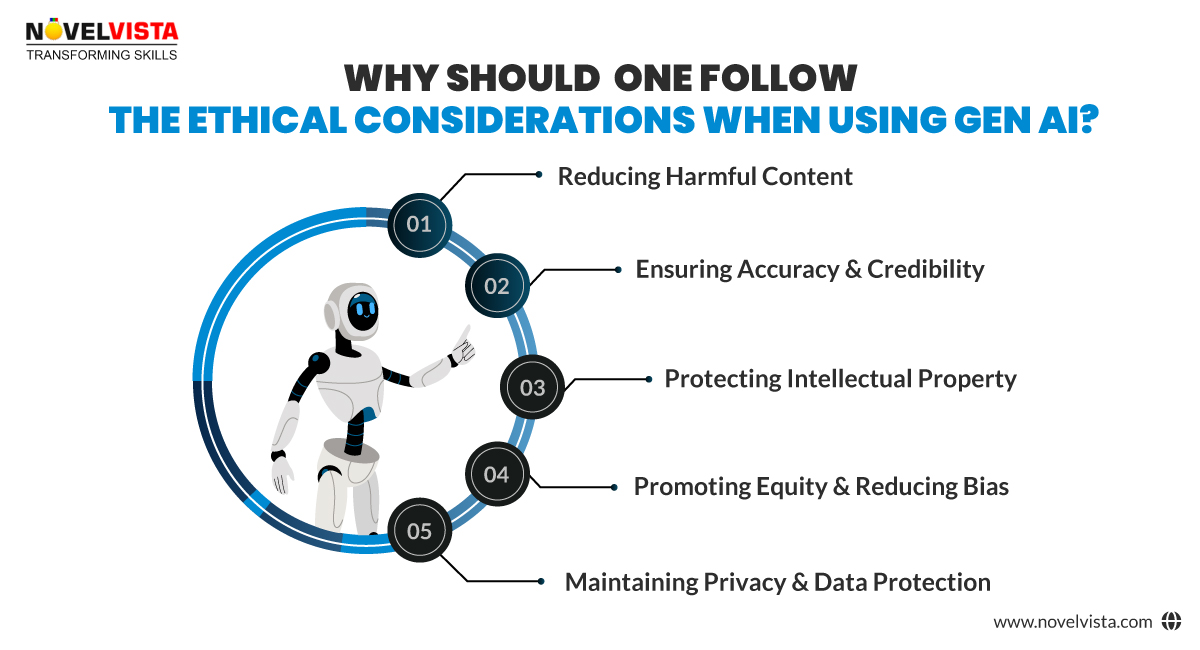

It’s important to follow ethical guidelines when using generative AI to make sure it’s used responsibly and fairly. Here are a few reasons why:

Misinformation, hate speech, and deepfakes are all examples of harmful content that generative AI can produce. Such content can mislead people and even hurt them. By following ethical norms, developers and users can help prevent misuse that could cause societal or personal harm, helping create a safer digital world.

Generative AI models can sometimes give incorrect information because of biases in their training data. To make sure the information is trustworthy, it's important to check data sources and be transparent. Following these ethical guidelines not only makes AI-generated content more reliable but also helps users think critically about the information they receive.

The application of generative AI raises concerns about copyright and intellectual property rights. Following ethical rules helps you manage these challenges, protecting creators' rights and keeping your work legally safe and hassle-free.

AI outputs can sometimes reflect biases, reinforcing harmful stereotypes and prejudices. To tackle this issue, it’s important to use diverse datasets and conduct regular checks on AI systems. This helps ensure fairness and promotes inclusivity, making AI more ethical and balanced for everyone.

Unlike traditional AI, generative AI often relies on large volumes of data, including sensitive information. Ethical principles emphasize the need to obtain informed consent and maintain user privacy to prevent unlawful data access or exploitation.


To conclude, the use of generative AI raises several significant ethical difficulties, including the generation of damaging content, issues of misinformation, copyright concerns, bias, privacy, and accountability.

To manage these complications, tech developers, governments, and society must collaborate to develop and use technology responsibly.

Continuous adjustments are required to ensure that the benefits of AI are available to all industries while adhering to ethical values. By encouraging an open discourse, we can embrace the potential of generative AI while remaining true to our beliefs. Learn more about generative AI in our NovelVista Generative AI certification course and take your career to the next level! What are your suggestions on how best to balance Gen AI and ethical considerations?
