Looking for DevOps SRE interview questions and answers but not finding good ones? Don't worry, we've got you covered. Site Reliability Engineering offers great job opportunities, and this guide covers what you need to prepare. Let's start.

Understand, what is SRE?

Computer systems are built to be reliable. A system is reliable if, most of the time, it performs as intended and isn't prone to unexpected failures and bugs. A site reliability engineer (SRE) is responsible for the stability and performance of websites, mobile applications, web services, and other online services. SREs monitor the performance of these systems to catch issues and make sure they are running smoothly.

Site Reliability Engineering is usually a bridge between the Development and Operations departments. It is a discipline that takes aspects of software engineering and applies them to infrastructure and operations problems. You can also get in-depth details regarding SRE on our blog, An Insight to Site Reliability Engineering.

As technology progresses, more and more jobs are opening up across the industry, and the SRE role is one of them. Hence, we have prepared the top site reliability engineer interview questions for you.

The job role of the Site Reliability Engineer includes the following responsibilities:

The job responsibilities of an SRE fall into two categories: technical work and process work. Technical work includes things such as writing code to automate tasks, provisioning new servers, and troubleshooting outages when they occur.

Process work includes things such as on-call rotations, incident response, and reviewing post-incident reports.

- Developing software to help DevOps, ITOps and Support Teams

- Fixing support escalation issues

- Optimizing on-call rotations and processes

- Documenting tribal knowledge

- Conducting post-incident reviews

Now, let's get into DevOps SRE interview questions and prepare ourselves. Following are the most commonly asked Site Reliability Engineering interview questions, which will help you understand how interesting the field can be.


Most asked Site Reliability Engineering (SRE) interview questions

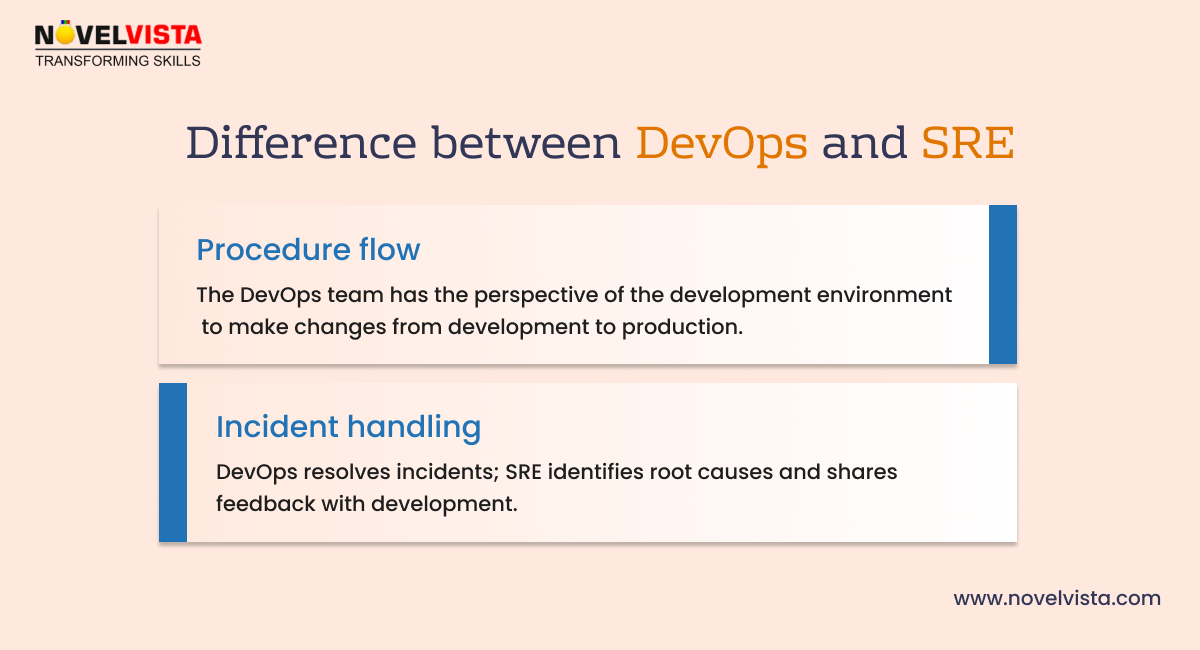

Q1. Differentiate between DevOps and SRE.

Answer: Implementing new features: DevOps is responsible for delivering new feature requests to the product, whereas SREs ensure those changes don't increase the overall failure rate in production.

Process flow: The DevOps team works from the perspective of the development environment, moving changes from development to production. SREs work from the perspective of production, so they can make recommendations to the development team to limit failure rates despite the new changes.

Incident handling: DevOps teams act on incident feedback to mitigate the issue, whereas SREs conduct post-incident reviews to identify the root cause and document the findings as feedback for the core development team.

Q2. Why do you want to do a job in SRE?

Answer: I am drawn to a career in SRE because of its dynamic and challenging nature. It combines my passion for software development and operations and offers a unique opportunity to bridge the gap between these two crucial aspects of technology.

The SRE role is well-aligned with my goal of ensuring the reliability, scalability and efficiency of systems that contribute to a seamless user experience.

Furthermore, I am eager to contribute to the growth of a company and am confident that my proactive approach and problem-solving abilities will make me a valuable member of the team. I am particularly interested in exploring career opportunities through Executive Search & IT Recruitment firms, as they offer access to a wide range of exciting roles and companies.

Q3. Do you know anything about SLO?

Answer: SLO stands for Service Level Objective. It is a target for a specific metric, such as uptime or response time, and it is often the measurable commitment within an SLA.

SLOs are agreed-upon targets that must be met for each activity, function and process to give consumers the best chance of success. They can also cover business metrics such as conversion rates, alongside uptime and availability.

Q4. What is Data Structure? Elaborate some of them.

Answer: A data structure is a way of organizing and storing data in a computer so that it can be accessed and manipulated efficiently.

There is a wide range of data structures that serve various purposes, and the choice of a specific data structure depends on the needs of the algorithms or operations being performed.

Arrays, linked lists, stacks, trees, heaps, and hash tables are common types of data structures.
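As a quick illustration, here is a minimal Python sketch of four of the structures named above, using only built-in types and the standard library. The function name and the sample values are made up for the example.

```python
import heapq


def demo_structures():
    # Array: Python's list gives indexed access to elements.
    array = [10, 20, 30]
    second = array[1]

    # Stack: last in, first out, using append and pop on a list.
    stack = []
    stack.append("deploy")
    stack.append("rollback")
    top = stack.pop()

    # Heap: heapq keeps the smallest item at the front.
    heap = [5, 1, 3]
    heapq.heapify(heap)
    smallest = heapq.heappop(heap)

    # Hash table: dict maps keys to values with fast lookup.
    table = {"web-1": "10.0.0.5"}
    address = table["web-1"]

    return second, top, smallest, address
```

Calling `demo_structures()` returns one value read back from each structure, which makes the access pattern of each one easy to compare.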

Q5. How do you differentiate between process and thread?

| Process | Thread |
| --- | --- |
| A program under execution is known as a process. | A thread is a segment of a process. |
| It takes more time to terminate. | It takes less time to terminate. |
| It takes more time to create and to context-switch. | It takes less time to create and to context-switch. |
| Communication between processes is less efficient. | Communication between threads is more efficient. |
| If one process is blocked, it does not affect the execution of other processes. | If one thread is blocked, it can affect the execution of other threads in the same process. |

Q6. Explain error budgets and what they are used for.

Answer: An error budget is how much downtime or unreliability a system can afford without upsetting consumers. It is the margin of error permitted by the service level objective.

It encourages teams to minimize actual incidents and maximize innovation by taking risks within acceptable limits.
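The arithmetic behind an error budget can be sketched in a few lines of Python. This is a minimal example, assuming an availability SLO measured over a 30-day window; the function names and the window length are illustrative choices, not a standard API.

```python
def error_budget_minutes(slo_target, window_days=30):
    """Minutes of allowed downtime for an availability SLO over the window."""
    total_minutes = window_days * 24 * 60
    return (1.0 - slo_target) * total_minutes


def budget_remaining_minutes(slo_target, downtime_minutes, window_days=30):
    """Budget left after subtracting the downtime already spent (may go negative)."""
    return error_budget_minutes(slo_target, window_days) - downtime_minutes
```

For a 99.9% SLO over 30 days, `error_budget_minutes(0.999)` comes to roughly 43.2 minutes. A negative result from `budget_remaining_minutes` means the budget is exhausted.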

Q7. What is the definition of error budget policy?

Answer: An error budget policy defines how the company tracks whether it is meeting its reliability promises for a system or service, and it prevents teams from pursuing too much innovation at the expense of that reliability.

Q8. Which activities help reduce toil?

Answer: Activities that reduce toil include creating external automation, creating internal automation, and enhancing the service so that it does not require manual maintenance.

Q9. What are the Service Level Indicators?

Answer: A service level indicator (SLI) is a specific metric that helps businesses measure some aspect of the level of service they provide to their consumers.

SLIs are the measurements that SLOs set targets for, and SLOs are in turn part of SLAs that affect overall service reliability. SLIs help businesses identify ongoing network and application issues, which leads to more efficient recoveries.
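To make the relationship concrete, here is a small Python sketch that computes an availability SLI from request counts and compares it with an SLO target. The function names are hypothetical, and treating a window with no traffic as fully available is an assumption made for the example.

```python
def availability_sli(good_requests, total_requests):
    """Fraction of requests that succeeded in the measurement window."""
    if total_requests == 0:
        # Assumption for this sketch: no traffic counts as fully available.
        return 1.0
    return good_requests / total_requests


def meets_slo(sli, slo_target):
    """True when the measured SLI is at or above the SLO target."""
    return sli >= slo_target
```

For example, 9,990 successful requests out of 10,000 gives an SLI of 0.999, which meets a 99.9% SLO, while 9,980 out of 10,000 does not.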

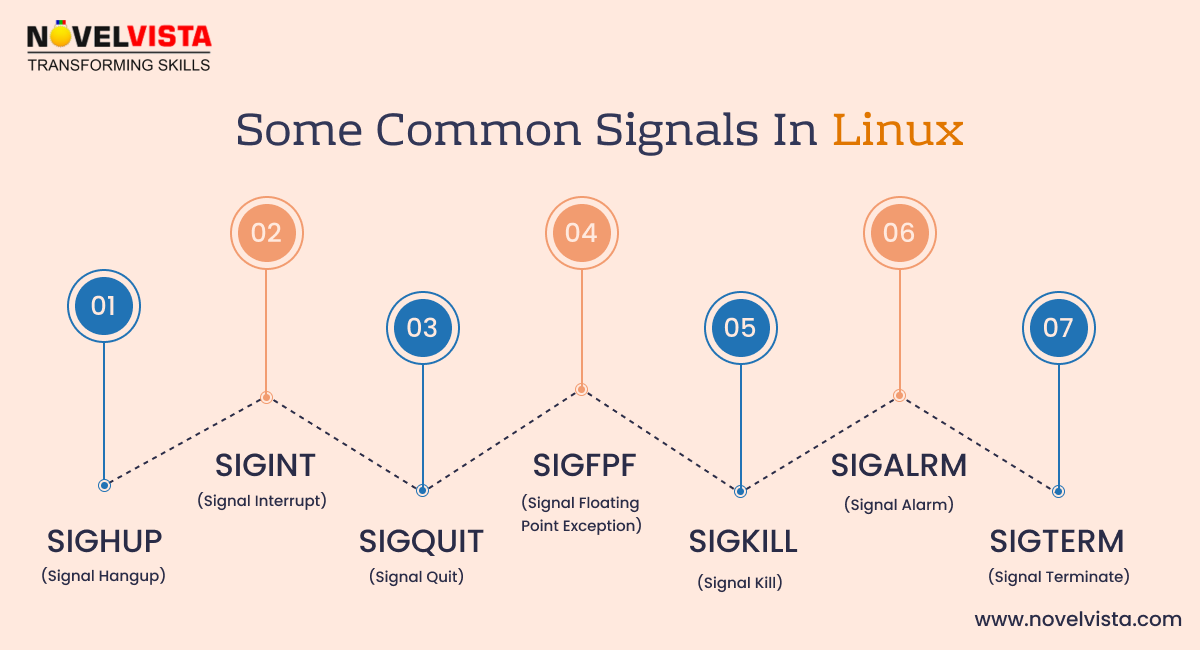

Q10. List down the Linux signals you know.

- SIGHUP

- SIGINT

- SIGQUIT

- SIGFPE

- SIGKILL

- SIGALRM

- SIGTERM
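The signals listed above can be looked up by name with Python's standard `signal` module. This is a minimal sketch that assumes a Linux (or other POSIX) host; the function name is made up for the example.

```python
import signal

SIGNAL_NAMES = ["SIGHUP", "SIGINT", "SIGQUIT", "SIGFPE", "SIGKILL", "SIGALRM", "SIGTERM"]


def signal_numbers():
    """Map each signal name to the number the operating system assigns it."""
    return {name: signal.Signals[name].value for name in SIGNAL_NAMES}
```

On Linux, `signal_numbers()` reports SIGKILL as 9 and SIGTERM as 15. A process can catch SIGTERM and shut down cleanly, whereas SIGKILL cannot be caught or ignored.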

Q11. Do you know TCP?

Answer: TCP, which stands for Transmission Control Protocol, is one of the main protocols of the Internet Protocol suite. It sits between the application and network layers, in the transport layer, and is mainly used to offer reliable delivery. It is a connection-oriented protocol that supports the exchange of messages between different devices over a network.

Q12. List down a few TCP connection states.

- LISTEN: The server is listening on a port, such as the HTTP port.

- SYN-SENT: The client has sent a SYN request and is waiting for a response.

- SYN-RECEIVED: The server has received a SYN, replied with a SYN-ACK, and is waiting for the client's ACK.

- ESTABLISHED: The 3-way TCP handshake has been completed.
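The states above can be seen in action with a short Python sketch that opens a TCP connection over the loopback interface. It is only an illustration: the function name is hypothetical, and the operating system performs the handshake itself, so the comments mark where each state is reached rather than controlling it.

```python
import socket


def tcp_round_trip(message):
    """Send bytes over a loopback TCP connection and return what the server read."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))   # port 0 lets the OS pick a free port
        server.listen(1)                # server socket is now in LISTEN
        port = server.getsockname()[1]

        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            # connect() sends the SYN (SYN-SENT) and returns once the
            # 3-way handshake is done (ESTABLISHED).
            client.connect(("127.0.0.1", port))
            conn, _addr = server.accept()
            with conn:
                client.sendall(message)
                data = b""
                # recv() may return partial data, so read until complete.
                while len(data) < len(message):
                    chunk = conn.recv(1024)
                    if not chunk:
                        break
                    data += chunk
                return data
```

While a connection like this is open, a tool such as `ss` or `netstat` on the same host would list the sockets in the LISTEN and ESTABLISHED states.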

Q13. What is defined as an Inode?

Answer: An inode is a data structure in UNIX that holds metadata about a file. Items stored in the inode include the mode, owner (UID, GID), size, and timestamps (such as access and modification times).
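Python's `os.stat` exposes most of this inode metadata. The sketch below reads it for a temporary file; the helper names and the dictionary keys are choices made for the example.

```python
import os
import stat
import tempfile


def inode_metadata(path):
    """Return a few of the fields the file system stores in the file's inode."""
    info = os.stat(path)
    return {
        "inode": info.st_ino,                 # inode number
        "mode": stat.filemode(info.st_mode),  # e.g. "-rw-------"
        "uid": info.st_uid,                   # owner user ID
        "gid": info.st_gid,                   # owner group ID
        "size": info.st_size,                 # size in bytes
        "links": info.st_nlink,               # number of hard links
        "mtime": info.st_mtime,               # last modification time
    }


def demo_inode():
    """Create a 5-byte temporary file and read its inode metadata."""
    with tempfile.NamedTemporaryFile() as tmp:
        tmp.write(b"hello")
        tmp.flush()
        return inode_metadata(tmp.name)
```

Note that the file name is not part of the inode. Names live in directory entries that point to the inode number.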

Q14. What are the Linux kill commands and their functions?

Answer: kill: This command sends a signal (SIGTERM by default) to a process identified by its PID.

killall: This command kills all processes with a particular name.

pkill: This command is like killall, except it matches processes by pattern, so a partial name is enough.

xkill: This command lets users kill a graphical program by clicking on its window.

Q15. What is cloud computing?

Answer: Cloud computing refers to the practice of storing and accessing data and applications on remote servers hosted over the internet, as opposed to local servers or the computer's hard drive.

Cloud computing, often known as Internet-based computing, is a technique in which the user receives a resource as a service via the Internet. The stored data can be files, pictures, documents, or any other storable material.

Q16. Describe the functions of the ideal DevOps team.

Answer: The functions of the ideal DevOps team can't be precisely defined. Broadly, the DevOps team bridges the development and operations departments and contributes to continuous delivery.

The ideal DevOps team combines software development and IT operations cooperatively to improve productivity, speed, and dependability across the software delivery lifecycle.

Its responsibilities include continuous integration, automated testing, deployment automation, monitoring, and cultivating an environment of communication and cooperation between the development and operations teams.

Q17. What is Observability, and how can we improve a business's system observability?

Answer: Observability emphasizes gathering and analyzing information from various sources to understand a system's behavior as a whole.

Teams can efficiently monitor, debug, and optimize their systems thanks to the core analysis loop, which is a continuous cycle of data gathering, analysis, and action.

To improve observability, identify the data flowing through your environment and focus on the types that are relevant to your goals. Then distill, curate, and transform that data into actionable insights, which also provide valuable clues about DevOps maturity.

Q18. What is the DHCP and its use?

Answer: The Dynamic Host Configuration Protocol, or DHCP for short, is a protocol that allows IP addresses to be distributed throughout a network quickly, automatically, and centrally. It is also used to configure a device's DNS server details, default gateway, and subnet mask.

It is also used to automatically request networking settings and IP addresses from the Internet service provider (ISP). In addition, it reduces the need for users or network administrators to assign IP addresses manually to every network device.

Q19. Elaborate on the difference between SNAT and DNAT.

| SNAT | DNAT |
| --- | --- |
| SNAT changes the source IP address of outgoing packets, so a single public IP address can be shared by several internal devices. | DNAT changes the destination IP address of incoming packets to route traffic to particular internal servers. |
| For packets leaving a network, it is often used to translate the private address or port into the public address or port. | Incoming packets with a public address or port as their destination are often redirected to a private IP address or port within the network. |
| It allows multiple hosts on the inside to reach any host on the outside. | It allows multiple hosts on the outside to reach a single host on the inside. |
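The two translations can be modelled with a toy Python sketch that treats a packet as a dictionary. This is only an illustration of which field each technique rewrites: the function names, the packet layout, and the addresses (taken from documentation and private ranges) are all assumptions, and a real NAT device also tracks connections so that replies can be translated back.

```python
def snat(packet, public_ip):
    """Rewrite the source address of an outgoing packet to the shared public IP."""
    return {**packet, "src": public_ip}


def dnat(packet, forwarding):
    """Rewrite the destination of an incoming packet using a forwarding table.

    forwarding maps (public_ip, public_port) to (private_ip, private_port).
    Packets with no matching rule are returned unchanged.
    """
    key = (packet["dst"], packet["dst_port"])
    if key not in forwarding:
        return packet
    private_ip, private_port = forwarding[key]
    return {**packet, "dst": private_ip, "dst_port": private_port}
```

With a rule mapping `("203.0.113.10", 80)` to `("192.168.1.50", 8080)`, an incoming packet addressed to the public IP on port 80 comes out of `dnat` addressed to the internal server.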

Q20. What are Hardlink and Soft Links? Suggest an example.

Answer: Hard Link: A hard link is an additional name for the same file. It is a directory entry that points to the same inode as the original, so the data stays accessible even if the original name is moved or deleted. Unlike soft links, hard links share the file's contents, so changes made through one name are visible through the others, and the data persists until the last hard link is removed.

Soft Link: A soft link (symbolic link) is a small pointer file that maps a filename to a pathname. Like a shortcut in Windows, it is nothing more than a reference to the original file and holds none of the file's actual contents. Users can remove a soft link without affecting the contents of the original file.

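Example: $ ln novel hardlink.file creates a hard link to the file novel, and $ ln -s novel softlink.file creates a soft link to it.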
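The difference in behaviour is easy to verify with Python's `os.link` and `os.symlink`. This is a minimal sketch, assuming a POSIX file system; the function and file names are invented for the example.

```python
import os
import tempfile


def demo_links():
    """Create a hard link and a soft link, delete the original, and compare."""
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "original.txt")
        hard = os.path.join(tmp, "hard.txt")
        soft = os.path.join(tmp, "soft.txt")

        with open(original, "w") as f:
            f.write("data")

        os.link(original, hard)      # second name for the same inode
        os.symlink(original, soft)   # pointer to the original path

        same_inode = os.stat(original).st_ino == os.stat(hard).st_ino

        os.remove(original)          # remove the original name

        with open(hard) as f:
            hard_content = f.read()  # data is still reachable via the hard link

        soft_resolves = os.path.exists(soft)  # follows the link to the target
        soft_is_link = os.path.islink(soft)   # the link file itself remains

        return same_inode, hard_content, soft_resolves, soft_is_link
```

After the original name is removed, the hard link still returns the data, while the soft link remains on disk but no longer resolves to anything.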

Q21. How will you secure your docker containers?

Answer: I would take the following steps to keep my Docker containers safe:

- Carefully select third-party containers.

- Turn on Docker Content Trust.

- Set a resource limit for each of your containers.

- Consider a third-party security tool.

- Use Docker Bench for Security.

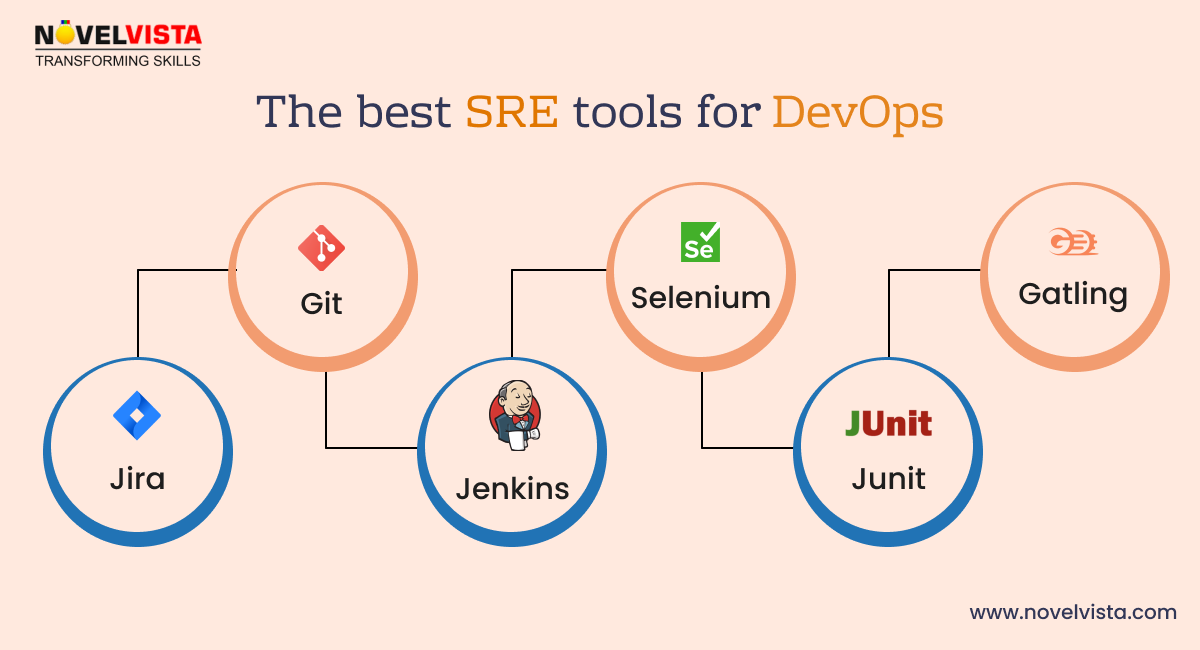

Q22. Describe the best SRE tools for DevOps.

- Jira: It helps in organizing, monitoring, and overseeing software development initiatives. It makes it easier for teams to work together and supports the creation of thorough roadmaps.

- Git: For collaborative development and version control.

- Jenkins: Jenkins is an automation server that facilitates building, testing, and deploying code. It can be coupled with Git to automate pipelines for continuous integration and continuous delivery (CI/CD).

- Selenium: An open-source tool for automating browser tests, which is helpful for web application development.

- JUnit: A popular Java framework for unit testing. TestNG is a separate framework that serves a similar purpose.

- Gatling: A performance testing tool for web applications.

23. What is Site Reliability Engineering?

It is a discipline that combines software engineering and system administration to ensure that systems can scale and can be relied upon. Site reliability engineers focus on developing efficient operational processes, monitoring system performance, and proactively fixing issues. They use SLIs, SLOs, and error budgets to balance the speed of development against the stability of the system.

24. What are the benefits of Multithreading?

Multithreading offers several advantages, especially in improving the performance and efficiency of applications:

- Parallel Execution: It allows multiple threads to run simultaneously, making better use of multi-core processors.

- Improved Performance: Tasks such as I/O operations, which would otherwise stall a program, can be handed to separate threads, which improves responsiveness.

- Utilization of Resources: Multithreading minimizes idle CPU cycles by keeping the processor busy running other threads.

- User Experience: In GUI applications, multithreading keeps the interface responsive while background tasks are executed.

- Scalability: It allows the system to do multiple things at once, so it scales better as the workload increases.

- Modularity: Code is often more modular, as different threads handle distinct parts of a task or application.
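As an illustration of the I/O benefit described above, here is a minimal Python sketch using the standard `concurrent.futures` thread pool. The `fake_io` function is a stand-in for a blocking network or disk call, and the worker count is an arbitrary choice for the example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(task_id, delay=0.05):
    """Stand-in for a blocking I/O call; sleeps, then returns a result."""
    time.sleep(delay)
    return task_id * 2


def run_concurrently(task_ids, max_workers=4):
    """Run the blocking calls on a thread pool; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_io, task_ids))
```

Because the threads spend their time waiting rather than computing, four tasks finish in roughly the time of one. In standard CPython, CPU-bound work benefits far less from threads because of the global interpreter lock, so this sketch speaks to the I/O case only.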

25. What performance monitoring tools are you familiar with?

I have hands-on experience with several SRE tools. Here are a few examples:

- Prometheus: For collecting metrics and monitoring systems in real time. I have used it for setting up alerts and visualizing data through Grafana.

- Grafana: To create detailed dashboards for monitoring application health, infrastructure performance, and custom metrics.

- New Relic: For application performance monitoring, especially for identifying bottlenecks in production environments.

- Datadog: For end-to-end monitoring of systems, servers, applications, and services, along with alerting capabilities.

- Nagios: Used for monitoring servers and ensuring uptime. I have configured custom checks for services.

26. Definition of Service Level Objectives

An SLO is a specific, measurable reliability target, such as 99.9% uptime or 95% successful API responses. Specific goals align teams around the aspects of performance that matter most. Working to meet SLOs helps ensure that the service meets users' expectations without compromising operational efficiency.

27. Describe APR

APR usually stands for 'Annual Percentage Rate,' but in the context of SRE or tech it might mean something else. For example, if the APR you are referring to is something like Application Performance Reporting, I can describe the tools and methods I've used for generating and interpreting performance reports across applications. Otherwise, I'm happy to elaborate once the meaning is clarified.

28. Explain the concept of Toil in SRE.

Toil is repetitive, manual operational work that adds no lasting value to a system, such as repeatedly rebooting servers or performing manual deployments. SREs minimize toil through automation so they can spend more of their time on high-impact activities that improve system performance, reliability, and overall efficiency.

29. How Will You Approach Incident Management?

Incident management starts with rapid detection and response to minimize downtime. SREs use monitoring tools to identify and diagnose problems, and they keep stakeholders informed of the status while working toward resolution. Afterwards comes a post-incident review and root cause analysis, which produces system improvements and strategies for avoiding similar incidents in the future.

30. What is the importance of monitoring and observability in SRE?

Monitoring and observability are key to giving any SRE a picture of a system's health. Monitoring alerts the team to a problem, while observability gives deeper insight into how the system is behaving, so that emerging problems can be addressed before they escalate. Together, they let SREs maintain a reliable system by catching failures before they reach end users or business processes.

31. Distinguish between proactive and reactive monitoring.

Proactive monitoring sets alert thresholds and analyzes trends ahead of time so that issues are caught before they cause an incident. Predictive trend analysis at the system level is one example. Reactive monitoring acts after an event has occurred and provides the means to find and fix the issue quickly. In short, proactive and reactive monitoring combine to uphold system reliability.

32. What monitoring and logging tools have you used?

Prometheus and Grafana are popular tools for real-time monitoring and visualization of detailed system performance metrics. For logging, the ELK Stack (Elasticsearch, Logstash, Kibana) and Splunk work well for analyzing logs, spotting patterns, and troubleshooting issues, giving better overall visibility and quicker resolution. Together, these tools help the team reduce SRE challenges.

33. How do you ensure high availability in a system?

High availability comes from redundancy, failover mechanisms, and getting rid of single points of failure. I design systems with load balancing using multiple servers or data centres, implement failover strategies, and ensure backups are available. Monitoring and auto-scaling also help to handle unexpected traffic surges without system downtime.

34. What does a playbook refer to in the context of SRE?

A playbook is a documented set of procedures to follow for specific operational activities or incidents. For example, it can include the steps to take when a service is down or when a deployment must be rolled back. This ensures that everyone on the team can act promptly, reducing response time in emergencies.

35. Define what chaos engineering is

Chaos engineering tests a system's resilience by purposefully injecting faults, exposing weaknesses so that the system can be improved to withstand unexpected behaviour. By simulating failures, up to and including a whole-system outage, teams learn how their systems actually behave and make them more robust.

36. What is Configuration Management?

Configuration management uses tools such as Ansible, Puppet, or Chef to automate and maintain consistent configurations across servers and environments. These tools avoid manual configuration errors and make system deployments and operations easy to scale. The result is consistent, repeatable infrastructure management.

37. What is the role of automation in SRE?

Automation in SRE exists to minimize toil, the manual and repetitive work of operations. By automating deployment, scaling, and monitoring, SREs improve system reliability and efficiency. It frees engineers to focus on innovating and tackling hard problems instead of spending time on routine operations.

38. State one instance when you improved system performance

By analyzing bottlenecks and applying caching techniques, we optimized a high-latency service. We introduced a distributed caching layer and optimized database queries, and these two changes produced a large decrease in response time and an improved overall user experience. As a result of the performance improvement, customer satisfaction increased markedly.

39. What is capacity planning and why is it important?

Capacity planning is the forecasting of resource needs in future, based on current usage trends along with the growth that is expected. It ensures that a system can handle increased traffic or demand without allowing performance to degrade. Effective capacity planning prevents bottlenecks, ensuring that the user experience is smooth even during peak usage periods.
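A very simple forecast of this kind can be sketched in Python. The example assumes steady compound growth month over month, which is a simplification; the function name, units, and figures are hypothetical.

```python
def months_until_capacity(current_load, capacity, monthly_growth_rate):
    """Months until load reaches capacity, assuming steady compound growth.

    Returns 0 if the system is already at capacity, and None if the load
    is not growing (capacity would never be reached under this model).
    """
    if current_load >= capacity:
        return 0
    if monthly_growth_rate <= 0:
        return None

    months = 0
    load = current_load
    while load < capacity:
        load *= 1 + monthly_growth_rate  # apply one month of growth
        months += 1
    return months
```

For instance, a service handling 600 requests per second with a ceiling of 1,000 and 10% monthly growth reaches its limit in 6 months under this model, which gives the team a deadline for adding capacity.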

40. How do you manage software deployments to minimize the risk?

To reduce the risks of deployment, I have strategies such as canary releases, where changes are rolled out to a small subset of users before a full rollout. Blue-green deployments allow switching between two identical environments, reducing downtime. Automation and continuous integration pipelines also help ensure smooth, error-free deployments.
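One common way to pick the "small subset of users" for a canary release is to hash each user ID into a stable bucket. The Python sketch below shows that idea; the function name and the choice of SHA-256 with 100 buckets are assumptions made for the example, not a prescribed method.

```python
import hashlib


def in_canary(user_id, percent):
    """Return True if this user falls inside the canary percentage.

    Hashing the ID gives each user a stable bucket from 0 to 99, so the
    same user always sees the same version while the rollout percentage
    stays unchanged.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent
```

Raising `percent` step by step widens the rollout, and setting it back to 0 sends everyone to the stable version again.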

41. What is the importance of postmortems in SRE

Learning from incidents is possible only through postmortems. They examine the root cause of failures, assess how the incident was handled, and identify improvements. A blameless postmortem culture encourages transparency and learning, leading to preventive actions, process improvements, and more resilient systems to avoid similar issues in the future.

42. How do you handle on-call responsibilities?

On-call responsibilities involve the readiness to react to an incident within a certain time. I make sure I have monitoring tools available and am aware of the escalation paths. While on call, I maintain concentration on finding the root cause of the problem, resolving it in the shortest time possible, and ensuring a seamless handover to the next team member when required.

43. What is load testing?

Load testing evaluates how a system behaves under various traffic loads, and hence it will identify bottlenecks and weaknesses. Through simulating high traffic, we can measure the system's ability to withstand increased demand. This helps scale systems efficiently while keeping performance stable during traffic spikes or surges.

44. How do you prioritize reliability improvements against new feature development?

Error budgets guide prioritization, balancing the need for new features with maintaining service reliability. If reliability falls below the defined SLO, resources shift towards addressing system issues. This ensures that reliability doesn't suffer due to new feature development, maintaining a balance between innovation and system stability.

45. What is a blameless culture, and why is it important in SRE?

A blameless culture focuses on learning from failure rather than holding an individual liable for it. It promotes open communication, so teams can take the time to scrutinize incidents without fear of punishment. It encourages continuous improvement, builds trust among team members, and leads to better outcomes from incident resolution.

46. How do you manage secrets and sensitive data in your systems?

Tools like HashiCorp Vault or AWS Secrets Manager store and manage sensitive data safely, and the data should be encrypted both in transit and at rest. The principle of least privilege restricts access so that only authorized services or users can retrieve the secrets they need for their operation.

47. What is the meaning of SRE? What are some roles and responsibilities of SRE?

SRE stands for Site Reliability Engineering, a discipline that combines software engineering with IT operations to ensure scalable and reliable systems. The responsibilities of a Site Reliability Engineer include monitoring system performance, automating repetitive tasks, implementing incident response strategies, and managing service-level objectives (SLOs). They also handle error budgets to balance system reliability with feature delivery. SREs aim to enhance system efficiency and reduce downtime.

48. How does version control fit into the configuration management strategy?

Version control lets you track changes made to configuration files with a full history, which makes rollbacks easier if issues arise. It provides consistency across all environments and allows team members to collaborate on tasks. Keeping configuration files under version control gives you reproducibility and transparency in handling infrastructure.

49. How do you manage database migrations when working in a live environment?

I handle database migrations in a live environment by testing carefully in staging environments, using version-controlled migrations with Flyway or Liquibase, rolling out migrations incrementally, scheduling migrations during off-peak hours to limit downtime, and keeping backups on hand in case of failure.

50. What is your approach to disaster recovery?

My approach covers automated periodic backups, multi-region replication of data, and predefined recovery procedures. I make sure that disaster recovery plans are tested regularly so that systems can be restored quickly, without much data loss or downtime, which ensures business continuity and reliable service delivery.

51. How do you remain updated on all the findings and trends in SRE technologies?

I attend industry conferences, participate actively in online forums and communities, subscribe to newsletters, read technical blogs, and continue learning through courses and certifications. All of this keeps me up to date with the emerging tools, practices, and methodologies that make systems more reliable.

52. What is the difference between DevOps and SRE?

DevOps vs SRE showcases two complementary approaches to managing software delivery and operations. DevOps focuses on fostering collaboration between development and operations teams, emphasizing culture, automation, and continuous integration/continuous delivery (CI/CD). SRE, on the other hand, applies engineering practices to ensure system reliability, using concepts like error budgets and service-level objectives. While DevOps drives process improvement, SRE prioritizes system performance and reliability. Together, they enhance efficiency in modern IT practices.

Conclusion

The above site reliability engineer interview questions are among the most common ones and will help you prepare for the interview. With their help, you will feel much more confident and knowledgeable.

At NovelVista, we hope you now understand the practical and theoretical knowledge needed for a DevOps SRE interview. This knowledge lets you go into detail, demonstrate your interest, and leave a positive impression on the interviewer.

SRE interview questions and answers will not only help you with the interview but also help you develop a basic understanding of SRE. To explore more, make sure to join our SRE Practitioner Training & Certification.

Author Details

Akshad Modi

AI Architect

An AI Architect plays a crucial role in designing scalable AI solutions, integrating machine learning and advanced technologies to solve business challenges and drive innovation in digital transformation strategies.
